I don’t know if it’s just me, but every time I ask ChatGPT for help, I do so as politely as possible.

“Could you please give me three suggestions for a name for XX event? Thank you so much!! *insert smiley face*”

Whether it is a classic case of paranoia or a fear that AI could one day take over humanity, it couldn’t hurt to be kind to them, right?

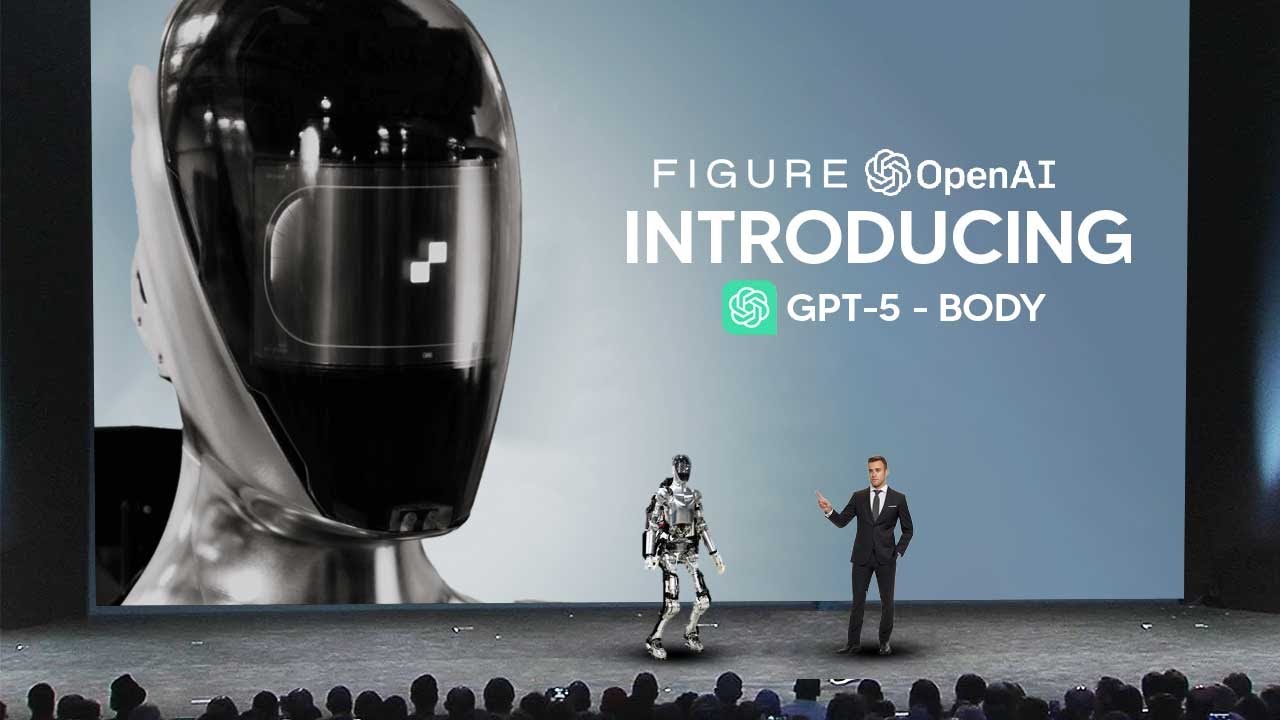

My politeness might one day pay off, as current and former employees of leading AI companies OpenAI and Google DeepMind have published a letter warning that AI poses a very real danger to humanity.

Risks AI Poses to Humanity Not Mitigated Properly as Companies “Have Strong Financial Incentives”

The letter, published on 4 June 2024, is co-signed by a total of 13 current and former employees of the leading AI companies.

Eleven of them are from OpenAI, while two are from Google DeepMind.

The letter is also endorsed by three computer scientists best known for their work on artificial neural networks, deep learning and artificial intelligence.

They began by stating that they believe in the potential benefits AI can provide to humanity, while also acknowledging “the serious risks posed by these technologies”.

They outlined these risks as follows:

- Further embedding of existing inequalities

- Manipulation and misinformation with the use of AI systems

- Loss of control of autonomous AI systems potentially leading to human extinction

These risks are acknowledged not only by the letter writers but also by AI companies, experts and governments all around the world.

As they say, you can’t have your cake and eat it too.

Herein lies the problem: these AI company employees had hoped that these risks could be mitigated with sufficient guidance from the scientific community, policymakers and the public.

However, according to them, “AI companies have strong financial incentives to avoid effective oversight”, and they don’t believe existing structures of corporate governance are sufficient to change this.

TL;DR: AI companies are prioritising financial gains over the safe management of their AI systems, and there isn’t enough oversight in place to ensure that they don’t.

A Call to Commit to Certain Principles for their Work in the AI Space

The letter alleges that AI companies hold substantial “non-public information about the capabilities and limitations of their systems, the adequacy of their protective measures, and the risk levels of different kinds of harm”.

However, they currently have only weak obligations to share some of this information with governments, and none at all with the general public.

The writers do not believe the companies can be relied upon to share this information voluntarily.

As such, they have called for the respective AI companies and others in the industry to commit to these principles:

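- They will not enter into or enforce any agreement that prohibits criticism of the company for risk-related concerns, nor retaliate against such criticism

- They will facilitate a verifiably anonymous process for current and former employees to raise risk-related concerns with the company’s board, regulators and an appropriate independent organisation

- They will support a culture of open criticism, allowing current and former employees to raise risk-related concerns about the company’s technologies, as long as trade secrets and other intellectual property are protected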

- They will not retaliate against current and former employees who publicly share risk-related confidential information after other processes have failed

Now, before we all panic and start stocking our doomsday bunkers, remember that AI does bring huge benefits to society, and not just by helping us write essays, of course.

For example, AI tools have helped bioengineers discover potential new antibiotics, allowed historians to read words on ancient scrolls and even helped climatologists predict sea-ice movements.

However, this powerful tool can also be heavily exploited and pose a danger to society if not used properly.

This letter isn’t the first of its kind to be published as a warning to us.

Last year, in May 2023, another group of industry leaders published an open letter warning about the existential threat AI poses to humanity.

Over 350 executives, researchers and engineers signed the letter, including some of the top names in the field.

Signatories included two of the three researchers who won a Turing Award for their pioneering work on neural networks, as well as the chief executives of OpenAI and Google DeepMind.


We can already see AI posing threats to humanity in various ways, even in how we behave socially.

Whether we like it or not, AI is here to stay.

So we had better learn to manage it, and hope the big companies do the same, for the sake of humanity.

This article is not written by AI (yet).